The most important AI news and updates from last month: Dec 15, 2025 - Jan 15, 2026.

✨ Updated on Jan 20.

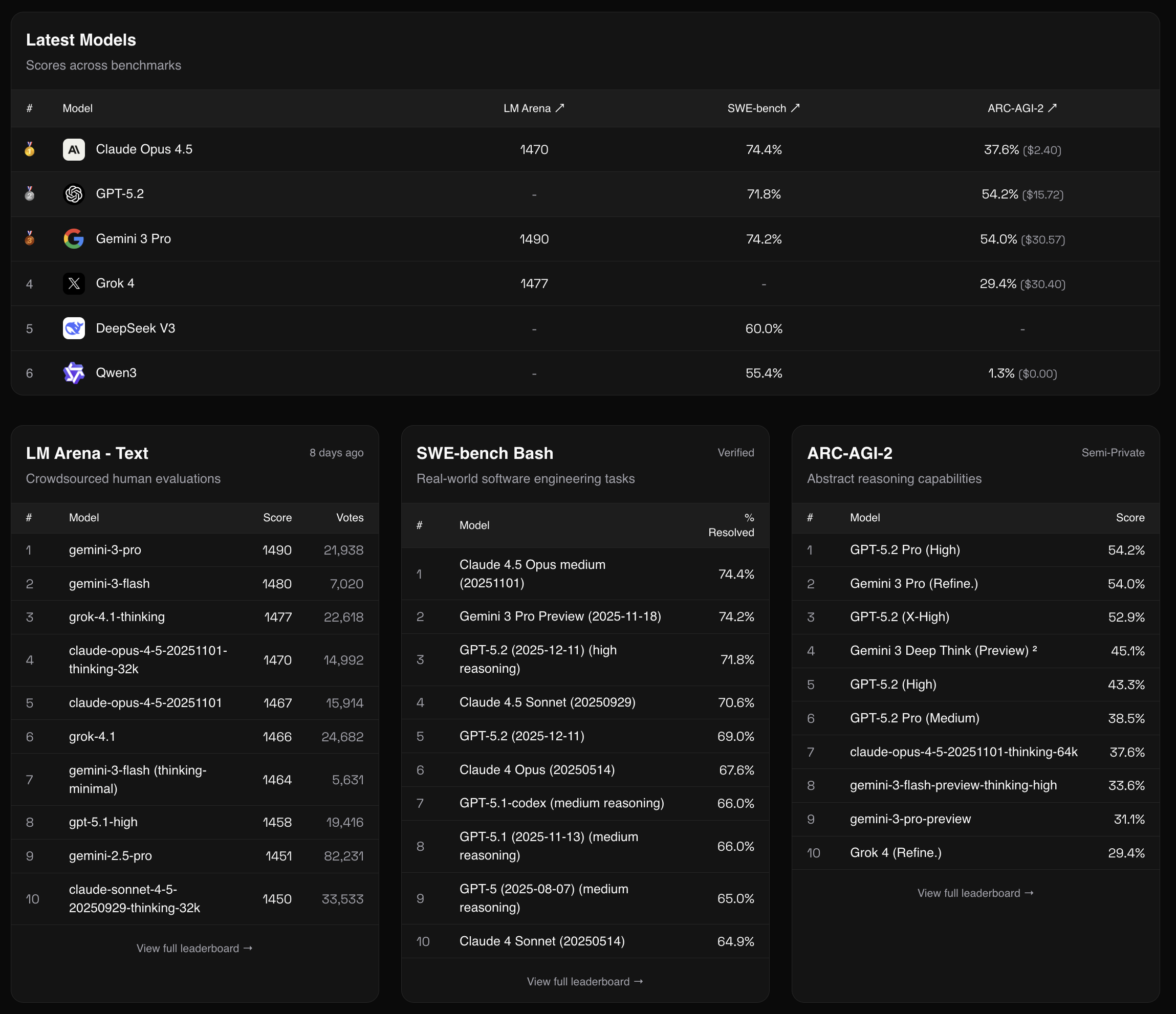

Link: aisocratic.org/leaderboard

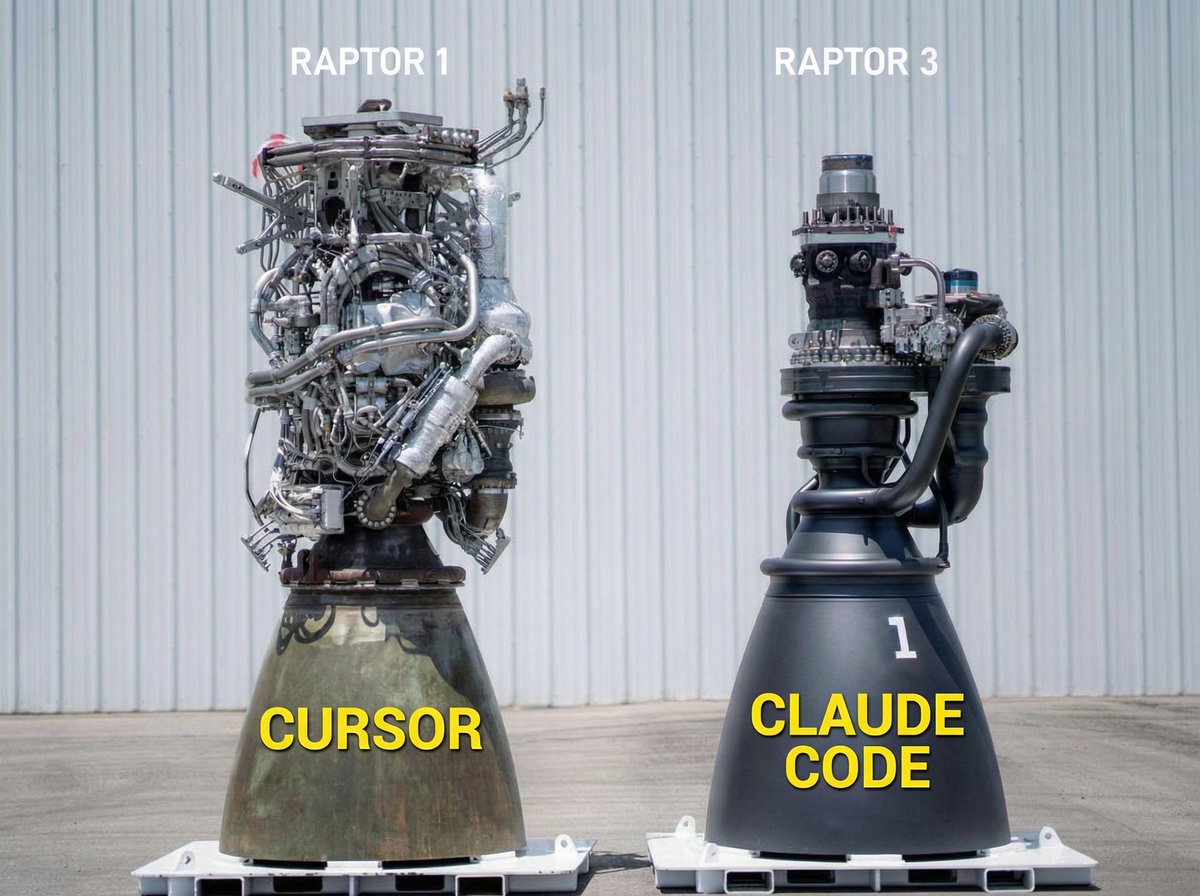

I personally went from 80% of my code being AI-generated via copy/paste to 100% via Claude Code. Coding has officially changed for good!

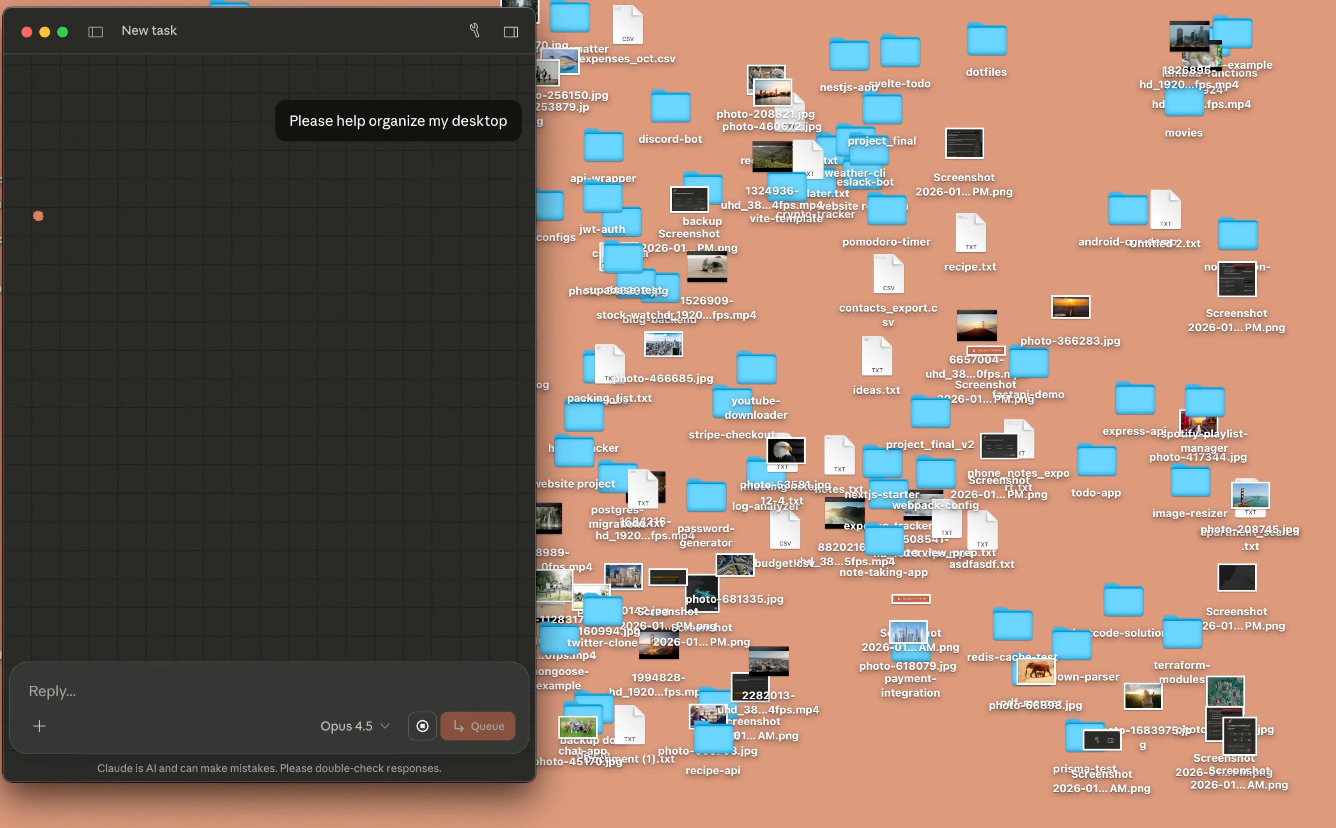

If you recall, Dario Amodei mentioned AI writing 90% of code by the end of 2026; it turns out we've reached 100%. Claude Code was released in May 2025 as a side project, and with Opus 4.5, which Anthropic released last month, it's now arguably the most productive software ever made. OpenAI defines AGI as "highly autonomous systems that outperform humans at most economically valuable work". Are we there already?

Claude just added a Chrome extension, and I'd argue it's the most productive tool ever. Not only does your CLI get eyes, it can also automate workflows that don't have an API.

There's now an official plugin marketplace. One plugin got extra attention: ralph-wiggum, which is essentially an infinite loop that re-runs a task until it's done:

```bash
while :; do cat PROMPT.md | claude ; done
```
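The shell loop above runs until you kill it. Here is a minimal Python sketch of the same idea with a stopping rule. It assumes a hypothetical convention where PROMPT.md tells the agent to print `TASK_COMPLETE` once the task is finished, plus an iteration cap. It calls Claude Code's non-interactive print mode (`claude -p`). This is not how the ralph-wiggum plugin itself is implemented; `ralph_loop`, `claude_runner`, and `DONE_MARKER` are illustrative names.

```python
import subprocess
from typing import Callable

# Hypothetical convention: PROMPT.md asks the agent to print this string when done.
DONE_MARKER = "TASK_COMPLETE"


def claude_runner(prompt: str) -> str:
    """Run one non-interactive Claude Code turn (assumes `claude` is on PATH)."""
    result = subprocess.run(
        ["claude", "-p", prompt], capture_output=True, text=True, check=True
    )
    return result.stdout


def ralph_loop(
    prompt: str,
    run_agent: Callable[[str], str] = claude_runner,
    max_iters: int = 50,
) -> int:
    """Re-send the same prompt until the agent reports completion.

    Returns the number of iterations used; raises if the cap is reached first.
    """
    for i in range(1, max_iters + 1):
        output = run_agent(prompt)
        if DONE_MARKER in output:
            return i
    raise RuntimeError(f"no {DONE_MARKER} after {max_iters} iterations")
```

Usage: `ralph_loop(open("PROMPT.md").read())`. The runner is injectable so the loop logic can be tested without calling the real CLI, and the cap keeps a confused agent from burning tokens forever.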

...

Concurrent agents ran uninterrupted for one week, each with a role: planner, worker, or judge. According to Cursor's blog post, GPT-5.2 is better at long-running tasks than Opus 4.5. Adding more management layers hurts performance, lol. Turns out scaling agents looks a lot like scaling companies: https://x.com/Yuchenj_UW/status/2011863636042469866.
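The planner/worker/judge split can be sketched as a simple round-based loop. This is a hedged illustration, not Cursor's harness (the post doesn't publish one): only the three roles come from the post. The round structure, the thread pool, and every function name here (`run_team`, `toy_plan`, `toy_work`, `toy_judge`) are assumptions, with toy functions standing in for LLM calls.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

Plan = Callable[[str, list[str]], list[str]]
Work = Callable[[str], str]
Judge = Callable[[str, list[str]], bool]


def run_team(goal: str, plan: Plan, work: Work, judge: Judge,
             n_workers: int = 4, max_rounds: int = 10) -> list[str]:
    """Planner -> parallel workers -> judge, repeated until the judge approves."""
    results: list[str] = []
    for _ in range(max_rounds):
        tasks = plan(goal, results)  # planner: decide what is still missing
        with ThreadPoolExecutor(max_workers=n_workers) as pool:
            results.extend(pool.map(work, tasks))  # workers run concurrently
        if judge(goal, results):  # judge: is the goal met?
            return results
    raise RuntimeError("judge never approved the result")


# Hypothetical toy goal: write tests for three modules.
MODULES = ["parser", "lexer", "cli"]


def toy_plan(goal: str, results: list[str]) -> list[str]:
    done = {r.split(":")[0] for r in results}
    return [m for m in MODULES if m not in done][:2]  # at most 2 tasks per round


def toy_work(task: str) -> str:
    return f"{task}: tests written"


def toy_judge(goal: str, results: list[str]) -> bool:
    return len(results) == len(MODULES)


print(run_team("write tests", toy_plan, toy_work, toy_judge))
```

Keeping a single planner and a single judge, rather than stacking more coordinators, mirrors the post's observation that extra management layers hurt.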

Forget everything you knew about video making. This type of content will become increasingly common in 2026, and the difference will be who understands the process and comes out in front. This is the kind of skill that will separate those who only watch AI from those who use AI to create something that attracts attention. How to render these videos: https://www.instagram.com/p/DTaNGPkEYBd.

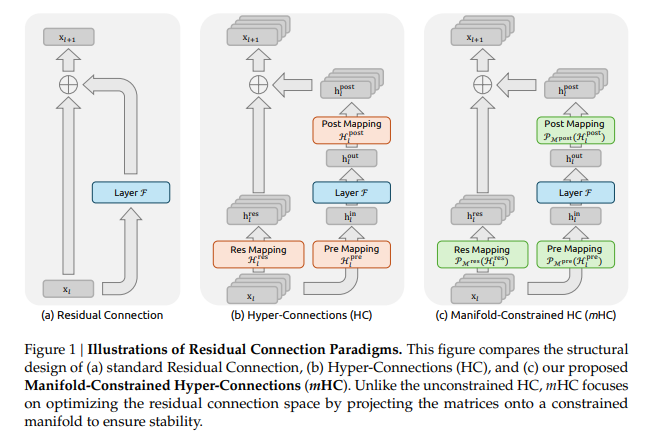

This is a new banger paper from DeepSeek!

Traditional residual connections (e.g., in ResNets and Transformers) add the layer output to the input, preserving an "identity mapping" that enables stable training in very deep networks. Hyper-Connections (HC), a more recent idea, expand this by widening the residual stream (multiple parallel streams instead of one) and using learned mixing matrices for richer information flow and better expressivity. However, unconstrained HC breaks the identity property, leading to severe training instability (exploding/vanishing gradients) and high memory overhead, which limits scalability.

Core Innovation: mHC

mHC (Manifold-Constrained Hyper-Connections) fixes HC by projecting the mixing matrices onto a specific mathematical manifold: the Birkhoff polytope (doubly stochastic matrices, whose entries are non-negative and whose rows and columns each sum to 1). This is achieved efficiently using the Sinkhorn-Knopp algorithm (an iterative normalization from 1967; ~20 iterations suffice).

Key benefits:

Efficiency Optimizations

DeepSeek added heavy infrastructure tweaks (kernel fusion, recomputation, communication overlapping) to keep overhead low (~6-7% extra training time).

Results

Experiments on models up to 27B parameters show:

In essence, mHC makes a theoretically superior but previously impractical idea (wider, diversified residuals) viable at scale, potentially unlocking new ways to improve LLMs beyond just more parameters or data. It's seen as a fundamental advance in topological architecture design, with community excitement around implementations and combinations (e.g., with value residuals). The original X thread is a fan announcement hyping it as a "huge model smell" breakthrough.
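Here is a minimal Python sketch of the projection step, not DeepSeek's implementation. Sinkhorn-Knopp exponentiates a square matrix of arbitrary "logits" to make every entry positive, then alternately rescales rows and columns so both sum to 1. The demo then composes 50 random per-layer mixing matrices. Unconstrained products typically grow or shrink exponentially with depth, while a product of doubly stochastic matrices is itself doubly stochastic, so its entries stay between 0 and 1. The `exp` parameterization, 4 streams, 50 layers, and the 0.5 logit scale are assumptions for illustration; the paper's exact parameterization may differ.

```python
import math
import random

Matrix = list[list[float]]


def sinkhorn_knopp(logits: Matrix, iters: int = 20) -> Matrix:
    """Approximately project a square matrix of logits onto the Birkhoff polytope."""
    n = len(logits)
    m = [[math.exp(v) for v in row] for row in logits]  # make every entry positive
    for _ in range(iters):
        row_sums = [sum(row) for row in m]
        m = [[v / row_sums[i] for v in row] for i, row in enumerate(m)]  # rows sum to 1
        col_sums = [sum(m[i][j] for i in range(n)) for j in range(n)]
        m = [[m[i][j] / col_sums[j] for j in range(n)] for i in range(n)]  # columns sum to 1
    return m


def matmul(a: Matrix, b: Matrix) -> Matrix:
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def compose(mats: list[Matrix]) -> Matrix:
    """End-to-end mixing after applying each layer's matrix in order."""
    out = mats[0]
    for m in mats[1:]:
        out = matmul(m, out)
    return out


def max_abs(m: Matrix) -> float:
    return max(abs(v) for row in m for v in row)


rng = random.Random(0)
n_streams, depth = 4, 50  # hypothetical: 4 residual streams, 50 layers
free = [[[rng.gauss(0, 1) for _ in range(n_streams)] for _ in range(n_streams)]
        for _ in range(depth)]
constrained = [sinkhorn_knopp([[0.5 * v for v in row] for row in m]) for m in free]

print("unconstrained, max |entry| after 50 layers:", max_abs(compose(free)))
print("doubly stochastic, max |entry| after 50 layers:", max_abs(compose(constrained)))
```

Because the final step of each iteration normalizes columns, column sums are exact and row sums converge toward 1. That is why a fixed, small iteration count is usually enough in practice.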

Sources:

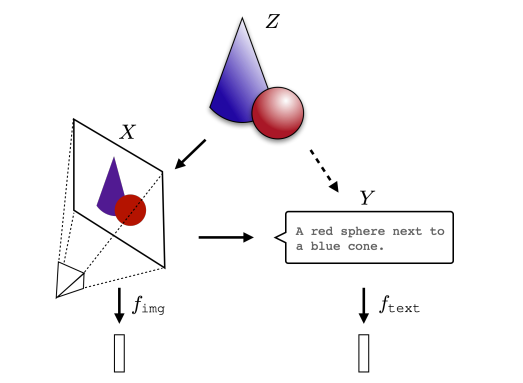

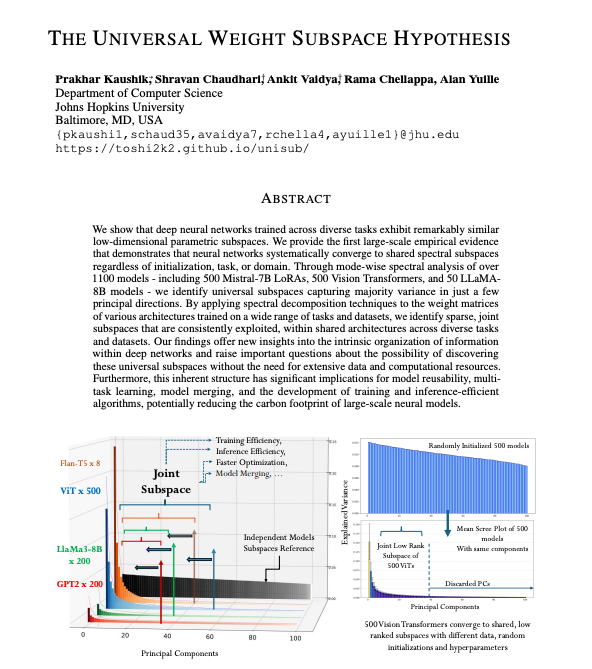

Neural networks, trained with different objectives on different data and modalities, are converging to a shared statistical model of reality in their representation spaces. Vision models, language models, different architectures are all slowly approximating the same underlying model of reality.

If this holds up, it's a huge unlock. We could translate between models instead of treating each one like a sealed black box, reuse interpretability wins across systems, and maybe align models at the representation level, not just by policing outputs.
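How would you measure whether two models "converge"? One metric used in this line of research is mutual k-nearest-neighbor alignment: embed the same inputs with both models and check how often their neighborhoods agree. Below is a minimal sketch, with toy 2-D vectors standing in for real model embeddings. The function names and data are hypothetical, and this is not necessarily the exact metric behind the work discussed here.

```python
import math

Vectors = list[list[float]]


def knn_sets(points: Vectors, k: int) -> list[set[int]]:
    """For each point, the indices of its k nearest neighbors (Euclidean, excluding itself)."""
    sets = []
    for i, p in enumerate(points):
        dists = sorted((math.dist(p, q), j) for j, q in enumerate(points) if j != i)
        sets.append({j for _, j in dists[:k]})
    return sets


def mutual_knn_alignment(a: Vectors, b: Vectors, k: int = 2) -> float:
    """Average overlap of k-NN sets when the same inputs are embedded by two models.

    1.0 means both models agree on every neighborhood; about k/(n-1) is chance level.
    """
    na, nb = knn_sets(a, k), knn_sets(b, k)
    return sum(len(x & y) / k for x, y in zip(na, nb)) / len(a)


# Hypothetical embeddings of the same 5 inputs from two different "models".
model_a = [[0, 0], [1, 0], [0, 2], [3, 3], [5, 1]]
model_b = [[2 * x, 2 * y] for x, y in model_a]  # same geometry, different scale
print(mutual_knn_alignment(model_a, model_b))
```

The metric only compares neighborhoods, so it ignores scale, rotation, and dimensionality differences between the two spaces. That is what lets it compare, say, a vision model with a language model.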

Johns Hopkins University reveals that neural networks, regardless of task or domain, converge to remarkably similar internal structures.

Their analysis of 1,100+ models (Mistral, ViT, LLaMA) shows they all use a few key "spectral directions" to store information.

This shared structure is far from what randomness would predict, and it offers a blueprint for more efficient multi-task learning and model merging, potentially cutting AI's computational and environmental costs drastically.
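The "few spectral directions" idea can be illustrated with a toy computation. Flatten weights from several models into vectors, then measure how much of their combined energy (squared norm) the single best shared direction captures. Below is a minimal sketch using power iteration, with made-up vectors. The actual study runs a much larger spectral analysis whose details differ, so treat `top_direction_share` (a hypothetical name) as an illustration of the idea only.

```python
import math

Vectors = list[list[float]]


def top_direction_share(vectors: Vectors, iters: int = 200) -> float:
    """Fraction of total squared norm captured by the best single shared direction.

    Power iteration on M = sum_i v_i v_i^T finds its top eigenvector without building M.
    """
    dim = len(vectors[0])
    d = [1.0 / math.sqrt(dim)] * dim  # starting guess (assumed not orthogonal to the answer)
    for _ in range(iters):
        proj = [sum(x * y for x, y in zip(v, d)) for v in vectors]  # v_i . d
        new = [sum(p * v[j] for p, v in zip(proj, vectors)) for j in range(dim)]  # M d
        norm = math.sqrt(sum(x * x for x in new))
        d = [x / norm for x in new]
    captured = sum(sum(x * y for x, y in zip(v, d)) ** 2 for v in vectors)
    total = sum(sum(x * x for x in v) for v in vectors)
    return captured / total


# Hypothetical "weight vectors" from three models that mostly share one direction.
aligned = [[1, 2, 2], [2, 4, 4.1], [-1, -2, -2.1]]
print(top_direction_share(aligned))
```

A share close to 1 means the vectors mostly live along one common direction. Mutually orthogonal vectors of equal length spread their energy evenly, so the best single direction captures only 1/n of it.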

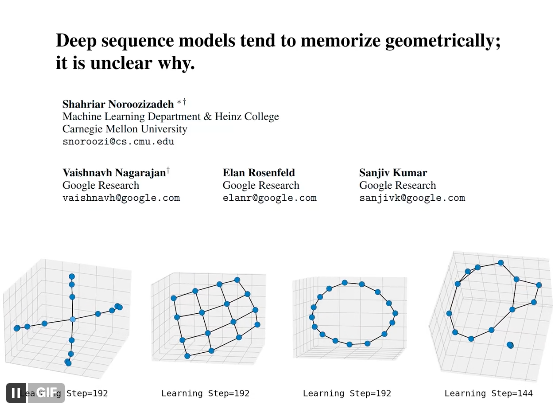

We found that deep sequence models memorize atomic facts "geometrically" -- not as an associative lookup table as often imagined.

This opens up practical questions on reasoning/memory/discovery, and also poses a theoretical "memorization puzzle."

The crazier implication is philosophical. Maybe MEANING isn't just a human convention. Maybe there are natural coordinates in reality and sufficiently strong learners keep rediscovering them.

So what's actually driving the convergence? The data, the objective, some deep simplicity bias? And where does it break?

Sources

The insane machines that make the most advanced computer chips, from Veritasium.

Other videos and pods from Dec and Jan:

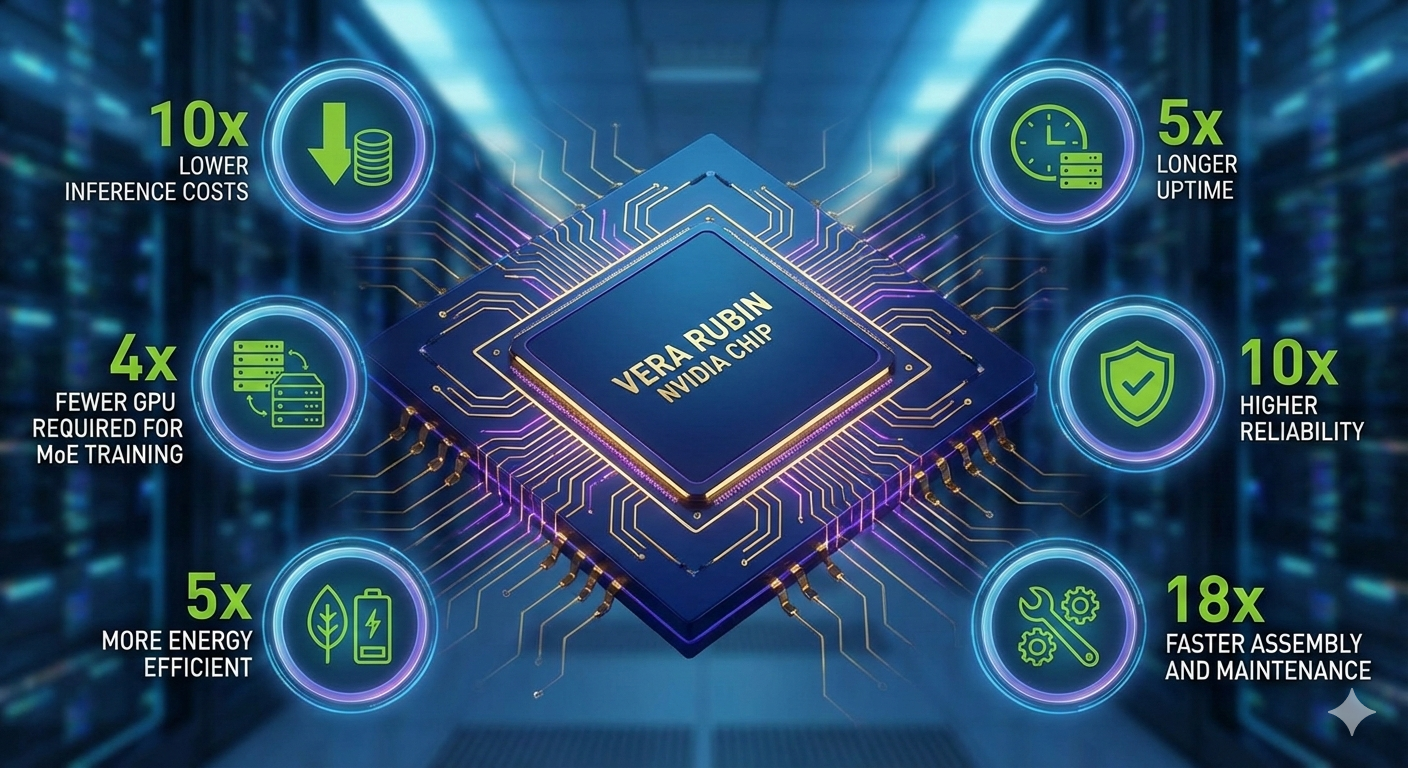

Nvidia launched a new chip: Vera Rubin. Here's how it compares to its predecessor, Blackwell:

sources: https://x.com/nvidia/status/2008357978148130866

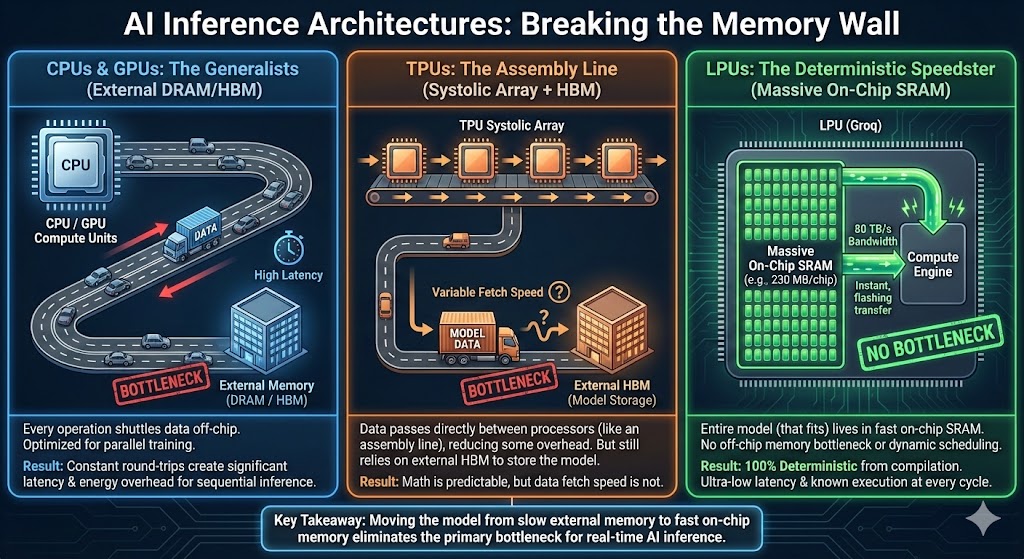

ℹ️ You guys may have noticed a trend in recent AI acquisitions: the buyer hires the founder and a few people in leadership positions and leaves the rest of the team in a zombie company. This happened with Meta > Scale, Google > Windsurf, and now NVIDIA > Groq and Meta > Manus. So why is that? Well, it turns out this structure avoids antitrust scrutiny.

Groq has entered into a non-exclusive licensing agreement with Nvidia for Groq’s inference technology. Groq Cloud will continue to operate without interruption. This blog post breaks down the antitrust loophole that enabled NVIDIA to close this $20B deal: https://ossa-ma.github.io/blog/groq.

Nvidia acquired:

Nvidia explicitly did NOT buy:

Jonathan Ross built TPUs at Google, then started Groq to build LPUs (Language Processing Units), a new architecture optimized for inference. Jonathan is a strong leader in the industry.

Meta just bought Manus, an AI startup everyone has been talking about https://x.com/TechCrunch/status/2005876896316469540.

Note: our mission is to democratize AI via open-source knowledge and decentralization. With that in mind, our community tries to share objective views.

The United States' capture of Venezuelan president Maduro has large geopolitical implications. Peter Zeihan, one of my favorite geopolitics experts (https://youtu.be/ddojVgGAryQ?si=nfpK2_JZNnjt334Q), explains how, by taking Venezuela, the US is showing a clear expansionist plan that follows the Monroe Doctrine.

Right after this attack, President Xi Jinping pledged to achieve the "reunification" of China and Taiwan (link: https://www.aljazeera.com/news/2026/1/1/chinas-xi-says-reunification-with-taiwan-unstoppable).

TSMC produces roughly 90% of the world's advanced semiconductors, used in everything from Nvidia GPUs to iPhone chips, car chips, and even defense systems. An invasion could halt production and cause an economic shock; I wouldn't be surprised if such an event burst the AI bubble. For now, the "Silicon Shield 🛡️" deters any attack, since everyone needs those chips. This might change: according to Reuters, China has built a prototype extreme ultraviolet (EUV) lithography machine in Shenzhen https://x.com/Megatron_ron/status/2001637940988899683.

So the question is not if China will build its own EUV and CUDA, but when. We know the US is trying to do the same, and possibly the EU too. Until then, we believe Taiwan will remain shielded from attacks.

These blog posts from SemiAnalysis.com shed some light on TSMC:

Founder, Engineer

AI Socratic

Founder of AI Socratic
